AI is not the engineer we wanted, but it might be the one we need

I love making fun of thought leaders, investors, and other folks who have no idea how software is built and operated—yet keep proclaiming that the software developer is dead. Every week, there’s at least one of these sprees on LinkedIn or in newsletters, where they go on and on about how some announcement is a game-changer that just wiped out an entire industry—from the Devin fiasco to breathless declarations that the singularity has arrived after watching a hackathon project demo.

As any seasoned software builder can tell you, and as we see every time an extraordinary claim arrives without extraordinary proof, AI still needs substantial, groundbreaking advances before it can truly replace a software engineer. That said, it would be silly to ignore that today’s AI technology is already powerful enough to disrupt huge parts of software development, namely B2B SaaS, internal tools, and other frontend-to-a-database systems.

The easy narrative is that this disruption comes from the growing power and increasing capabilities of AI systems. I agree that AI is the tipping point here, but less because of its raw power and more because it’s the final missing piece in a picture we’ve been painting for two decades. Let me explain.

CRUD Before the Cloud

Until the late ’80s, domain-specific software applications were rare outside corporate, government, and research settings. If a business was computerized—which was uncommon to begin with—it typically relied on generic spreadsheets and simple database automations.

This changed in the ’90s. Walk into a computer store or flip through a magazine, and you’d find dozens of shrink-wrapped software packages catering to every niche imaginable—from video rental stores to law firms to astrology.

What’s interesting about this software is how similar it all looked. At their core, these applications were just forms and wizards for manipulating and querying a database. The user interfaces followed the same patterns—no remote clients, just local systems with barcode scanners and printers.

This Cambrian explosion of software was fueled by modern databases and third-generation programming languages like Visual Basic and Delphi. These tools were perfect for the era, simplifying CRUD operations on a local database and allowing developers to focus on business logic instead of low-level details. Small teams could build entire businesses by reusing the same architecture across different niches—after all, a video rental app isn’t that different from a bed-and-breakfast management system once you strip away the domain-specific details.
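
To make the "same architecture, different niche" point concrete, here is a minimal sketch in modern Python with SQLite rather than period Visual Basic or Delphi. The `CrudTable` class and the table and column names are invented for illustration; the only thing that changes between the video rental store and the bed-and-breakfast is the list of fields.

```python
import sqlite3


class CrudTable:
    """Generic create/read/update/delete over one table in a local database."""

    def __init__(self, conn, table, columns):
        # Table and column names come from trusted code, not user input,
        # so interpolating them into the SQL text is acceptable in this sketch.
        self.conn = conn
        self.table = table
        self.columns = list(columns)
        cols = ", ".join(f"{c} TEXT" for c in self.columns)
        conn.execute(
            f"CREATE TABLE IF NOT EXISTS {table} "
            f"(id INTEGER PRIMARY KEY, {cols})"
        )

    def create(self, **values):
        names = ", ".join(values)
        marks = ", ".join("?" for _ in values)
        cur = self.conn.execute(
            f"INSERT INTO {self.table} ({names}) VALUES ({marks})",
            tuple(values.values()),
        )
        return cur.lastrowid

    def read(self, row_id):
        cur = self.conn.execute(
            f"SELECT * FROM {self.table} WHERE id = ?", (row_id,)
        )
        row = cur.fetchone()
        if row is None:
            return None
        return dict(zip(["id"] + self.columns, row))

    def update(self, row_id, **values):
        sets = ", ".join(f"{name} = ?" for name in values)
        self.conn.execute(
            f"UPDATE {self.table} SET {sets} WHERE id = ?",
            (*values.values(), row_id),
        )

    def delete(self, row_id):
        self.conn.execute(f"DELETE FROM {self.table} WHERE id = ?", (row_id,))


# Two "products" built on the same plumbing; only the fields differ.
conn = sqlite3.connect(":memory:")
rentals = CrudTable(conn, "rentals", ["title", "customer", "due_date"])
bookings = CrudTable(conn, "bookings", ["room", "guest", "checkout_date"])

rental_id = rentals.create(title="Jurassic Park", customer="Ana", due_date="1994-03-02")
booking_id = bookings.create(room="Garden Suite", guest="Ben", checkout_date="1994-03-05")
rentals.update(rental_id, due_date="1994-03-04")
```

Everything domain-specific lives in the two constructor calls; the forms, wizards, and reports of the era sat on top of plumbing much like this.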

From Boxes to Browsers

As the ’90s ended, Salesforce and others began promoting the “no software” movement—a clever marketing stunt aimed at disrupting traditional CRM systems. In doing so, they helped unleash Software-as-a-Service (SaaS), which brought massive advantages for both users and software producers. SaaS quickly became the dominant model for building and distributing software, making anything else a rare exception.

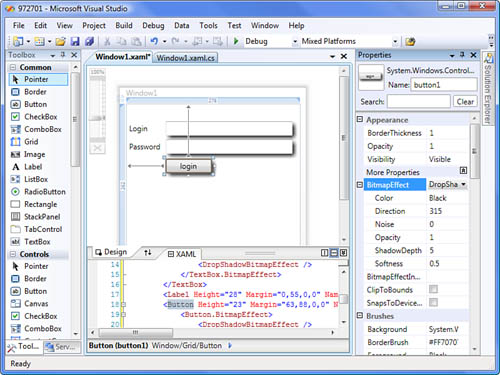

But web development was an entirely new discipline, requiring fresh ways of thinking and writing code. Companies that had thrived in the desktop era tried to transplant their models to the web, with many failed attempts—ranging from Sun’s JavaServer Faces and Portlets to Microsoft’s Web Forms.

Ultimately, these desktop-like development experiences were relegated to corporate IT departments, where technology was seen as a cost center rather than a core business function. Every successful tech company—even those using Microsoft’s .NET—had to abandon this thinking and develop a new understanding of how to build scalable, user-facing web applications.

By the late 2000s and early 2010s, building web products required solid engineering skills. Developers had to understand everything from securely authenticating users to deploying without downtime—and most of us were learning on the job.

The rise of productivity-focused frameworks like Ruby on Rails reshaped web development culture, pushing developers toward maximizing code reuse. In the early Web 2.0 era, relying on third-party solutions for user authentication, payment processing, or inventory management was seen as heresy. But Rails changed the game.

The Rails culture has always been about experimentation and challenging the status quo—all in the name of productivity. From the start, Rails developers eagerly delegated as much as possible to libraries and external services. And as Rails became the backbone of every cool startup in the early 2010s, this mindset didn’t just gain acceptance—it became the hallmark of a high-caliber developer.

In true Startupland fashion, the growing reliance on third-party components quickly became an opportunity for new products and services. Companies like Stripe, Auth0, Twilio, SendGrid, and Algolia began offering these as APIs developers could integrate effortlessly. Meanwhile, developer-focused infrastructure providers like Render, Timescale, and Vercel made infrastructure worries obsolete—even at scale, handling millions of users and terabytes of data.

There and Back Again

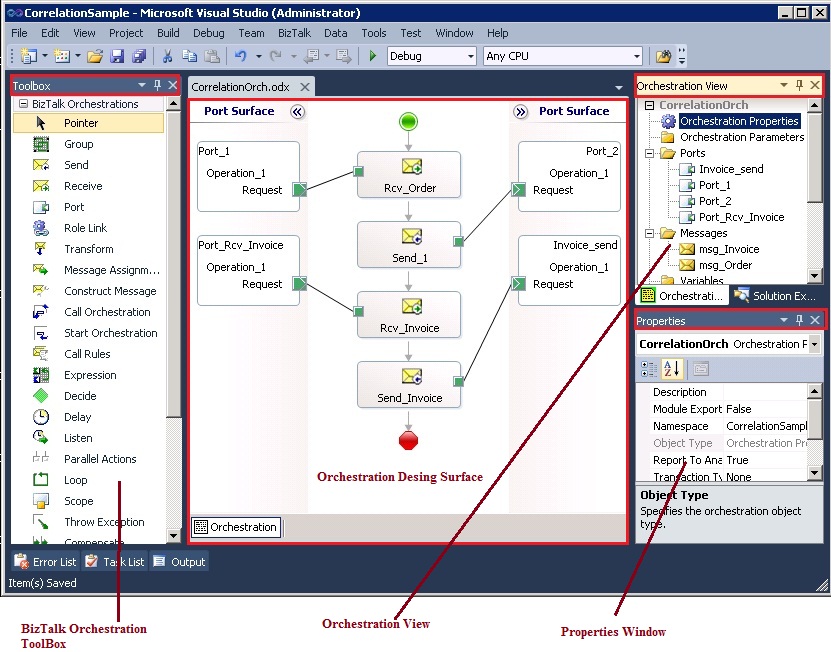

And this brings us full circle to what application development was like in the ’90s. From the late 2010s to today, building B2B SaaS applications has largely become an exercise in gluing together open-source and SaaS components with custom business logic to orchestrate them. Application architecture has been heavily commoditized, and today’s developers are mostly selecting the right components off the shelf and wiring them together to meet business needs.
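
A rough sketch of what that gluing looks like in practice. The `PaymentGateway` and `Mailer` interfaces below are hypothetical stand-ins for hosted services, not the real API of any provider, and the pricing rule is invented for the example. The point is how little of the code is custom: one function holding a business rule and the order of two calls.

```python
from dataclasses import dataclass, field
from typing import Protocol


class PaymentGateway(Protocol):
    """Hypothetical interface for an off-the-shelf payments component."""

    def charge(self, customer_id: str, amount_cents: int) -> str: ...


class Mailer(Protocol):
    """Hypothetical interface for an off-the-shelf email component."""

    def send(self, to: str, subject: str) -> None: ...


@dataclass
class FakePayments:
    # In-memory stand-in so the sketch runs without any external service.
    charges: list = field(default_factory=list)

    def charge(self, customer_id, amount_cents):
        self.charges.append((customer_id, amount_cents))
        return f"ch_{len(self.charges)}"


@dataclass
class FakeMailer:
    sent: list = field(default_factory=list)

    def send(self, to, subject):
        self.sent.append((to, subject))


def renew_subscription(customer_id, email, seats, payments, mailer):
    """The only custom part: a pricing rule and the order of the calls."""
    price_per_seat_cents = 1200
    total = seats * price_per_seat_cents
    # Business rule (invented for this example): 10% off for 10 or more seats.
    if seats >= 10:
        total = total * 90 // 100
    charge_id = payments.charge(customer_id, total)
    mailer.send(email, f"Receipt {charge_id}")
    return total


payments = FakePayments()
mailer = FakeMailer()
total = renew_subscription("cus_1", "ana@example.com", 10, payments, mailer)
print(total)  # 10800
```

Swapping the fakes for real clients changes the wiring, not `renew_subscription`, which is why the business rule ends up being the only part that is truly specific to the product.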

Look inside any software team—from Google to a five-person startup—and you’ll see that this is exactly what most developers do all day. The hardest parts of application development are delegated to open-source, SaaS, or internal platform components, while developers focus on business logic and user experience.

This pattern raises an obvious question: if application developers are mostly translating business rules into code without much engineering heavy lifting, why can’t the business expert—the one who already knows the rules—do this work directly? How do we eliminate the translator?

Many tried to do exactly this in the ’90s, using everything from natural language programming to drag-and-drop tools, and it’s striking how much those attempts resemble the “AI Agent development tools” we see today.

Despite companies pouring incredible amounts of money into tools that promised to take the application developer out of the equation, this dream has never fully materialized for two key reasons:

- Learning curve trade-offs: While these tools remove technical jargon, they introduce their own systems that must be learned and mastered. Worse yet, unlike programming languages, knowledge of one proprietary tool rarely transfers to another.

- Power vs. simplicity balance: Simplification inevitably comes at a cost—these tools sacrifice power and flexibility. They excel at orchestrating existing components, but implementing anything beyond basic logic (even moderately complex conditional chains) becomes cumbersome. Users are limited to recombining prebuilt components, with little ability to create truly custom solutions.

The core issue was that these tools weren’t truly translating between business and code—they created an entirely different development platform that users had to learn and depend on, without offering anywhere near the power and flexibility of just writing code.

AI Over Convention Over Configuration

Generative AI has a real opportunity to reshape software development—not by replacing engineers outright, but by bridging the gap between business logic and implementation.

Thought leaders on LinkedIn love to proclaim that AI will replace all engineers within the year. If we’re talking specifically about B2B SaaS applications and internal tools, I can see a version of this happening. Not because today’s AI models are competent software engineers, but because we have built a world where you no longer need to be one to create these kinds of applications.

With the right set of reusable components, even a year-old AI model can functionally perform the job of a mid-level SaaS developer. The challenge is not raw capability but direction and reliability. Chatbots and AI agents that communicate in natural language feel inefficient and unnatural for structured development, and today’s models still frequently misuse APIs, call nonexistent functions, or confuse component versions, which makes their output unreliable for production work.
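
To illustrate the failure mode, here is a small sketch of how a platform might catch a call to a nonexistent function or a misused signature before anything runs. This is one possible guardrail under my own assumptions, not a description of how any existing tool works; the `COMPONENTS` catalog and both component functions are hypothetical.

```python
import inspect


def send_invoice(customer_id: str, amount_cents: int) -> str:
    return f"invoice for {customer_id}: {amount_cents}"


def create_user(email: str) -> str:
    return f"user {email}"


# Hypothetical catalog of the components a generated app is allowed to call.
COMPONENTS = {"send_invoice": send_invoice, "create_user": create_user}


def check_call(name, kwargs):
    """Return a list of problems with a proposed call; empty means it looks valid."""
    func = COMPONENTS.get(name)
    if func is None:
        return [f"unknown component: {name}"]
    try:
        # bind() raises TypeError for missing or unexpected arguments
        # without actually executing the component.
        inspect.signature(func).bind(**kwargs)
    except TypeError as exc:
        return [f"bad arguments for {name}: {exc}"]
    return []


# Calls as a model might propose them: one valid, one invented, one misused.
proposed = [
    ("send_invoice", {"customer_id": "c1", "amount_cents": 500}),
    ("send_refund", {"customer_id": "c1"}),
    ("create_user", {"mail": "ana@example.com"}),
]
for name, kwargs in proposed:
    print(name, check_call(name, kwargs) or "ok")
```

A check like this only works because the components are a closed, well-described set, which is exactly the world the last fifteen years of tooling have built.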

This opportunity feels a lot like what Heroku did for cloud computing, only bigger. Where Heroku simplified infrastructure through a system of conventions, AI can make application development itself more accessible. There are still many unanswered questions, but if I were running a developer-focused platform like Render or DigitalOcean, this would be my top priority.

Over the past 15 years, we have built the technology, ecosystem, and culture that allow application engineers to delegate most complex and undifferentiated work to pre-existing components. The infrastructure is already in place. Generative AI is arriving at exactly the right moment to take advantage of it.